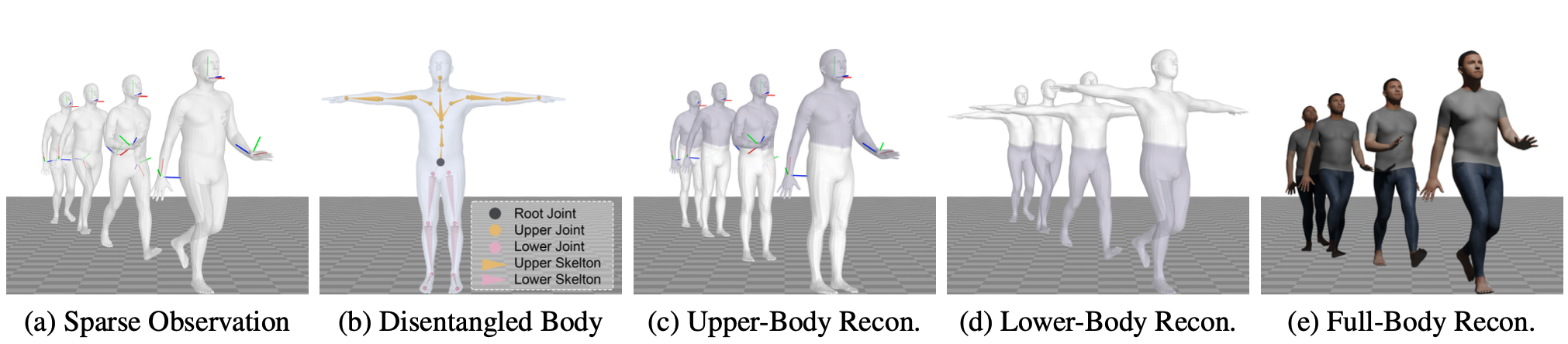

Stratified avatar generation from sparse observations. Given the sparse sensor observation of body motion, i.e., the 6-DoF poses of the head and hands (marked by RGB axes) in (a), our method leverages a disentangled body representation (b) to reconstruct the upper body conditioned on the sparse observation (c), and then the lower body conditioned on the upper-body reconstruction (d), yielding the full-body reconstruction (e).
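The stratified data flow in panels (c) to (e) can be illustrated with a minimal sketch. It shows only the conditioning order: the upper body is predicted from the sparse observation, the lower body from the upper-body result, and the two are merged into one pose. The learned generators are replaced by random linear maps, and the joint split (`UPPER_JOINTS`, `LOWER_JOINTS`), the 6D rotation encoding, and the observation layout (`OBS_DIM`) are illustrative assumptions, not the method's actual architecture.

```python
import numpy as np

# Hypothetical split of the 22 SMPL body joints into an upper-body group
# (pelvis/root, spine, neck, head, arms) and a lower-body group (hips, knees,
# ankles, feet). This grouping is an assumption for illustration.
UPPER_JOINTS = [0, 3, 6, 9, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21]
LOWER_JOINTS = [1, 2, 4, 5, 7, 8, 10, 11]
NUM_JOINTS = 22
ROT_DIM = 6  # assumed 6D rotation representation per joint

# Assumed observation layout: head + two hands, each a 6D rotation + 3D position.
OBS_DIM = 3 * (6 + 3)

rng = np.random.default_rng(0)

# Placeholder "models": random linear maps standing in for learned generators.
W_upper = rng.standard_normal((OBS_DIM, len(UPPER_JOINTS) * ROT_DIM)) * 0.01
W_lower = rng.standard_normal((len(UPPER_JOINTS) * ROT_DIM, len(LOWER_JOINTS) * ROT_DIM)) * 0.01


def reconstruct_upper(sparse_obs):
    """Stage (c): upper-body pose conditioned only on the sparse observation."""
    return (sparse_obs @ W_upper).reshape(len(UPPER_JOINTS), ROT_DIM)


def reconstruct_lower(upper_pose):
    """Stage (d): lower-body pose conditioned only on the upper-body result."""
    return (upper_pose.reshape(-1) @ W_lower).reshape(len(LOWER_JOINTS), ROT_DIM)


def reconstruct_full_body(sparse_obs):
    """Stage (e): run both stages in order and scatter them into one pose array."""
    upper = reconstruct_upper(sparse_obs)
    lower = reconstruct_lower(upper)
    full = np.zeros((NUM_JOINTS, ROT_DIM))
    full[UPPER_JOINTS] = upper
    full[LOWER_JOINTS] = lower
    return full


obs = rng.standard_normal(OBS_DIM)
pose = reconstruct_full_body(obs)
print(pose.shape)  # (22, 6)
```

The point of the ordering is that the lower-body stage never sees the sensor data directly; it is driven entirely by the upper-body estimate, which is where the sparse head and hand signals are most informative.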